Have you ever wanted to rename an existing connection in Azure Data Studio? Unfortunately, as of today (April 2020), there is no way to modify an existing connection group or data source connection through the GUI.

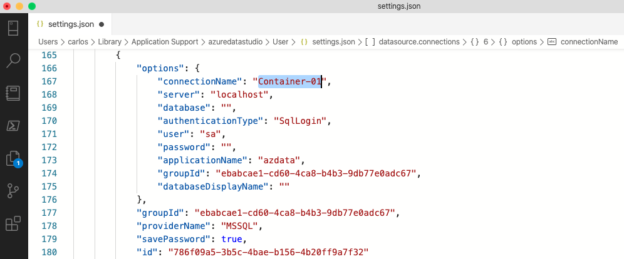

Luckily for us, Azure Data Studio (ADS) is a very powerful and flexible tool that allows us to modify many settings without the need for a GUI. All of these changes can be made simply by updating the settings.json file. Honestly, though, you don’t need to be an expert in JSON to modify this file. I will do my best to explain how to make these changes easily and quickly.