Unless you have been living under a rock lately, you have heard about GDPR. It has been a hot topic on technology websites, blogs and social media for the past two weeks, and even more so today because this data regulation goes into effect today (05/25).

In this post, I will do my best to condense the information about this topic and focus on what is really important: is your SQL Server environment ready for GDPR?

So, what is GDPR?

It is a data regulation that is fundamentally about protecting and enabling the privacy rights of individuals. It also establishes strict global privacy requirements governing how personal data is managed and protected, while respecting individual choice.

Personal data in scope of the regulation can include, but is not limited to, the following:

- Name

- Identification number

- Email address

- Online user identifier

- Social media posts

- Physical, physiological, or genetic information

- Medical information

- Location

- Bank details

- IP address

- Cookies

How is this going to affect my SQL Server environment?

If you are wondering how this is going to affect your SQL Server environment or how you should prepare, don’t worry: Microsoft has it covered. I personally think Microsoft has done a wonderful job strengthening the security features of SQL Server in the last two versions (2016 and 2017). There are multiple white papers, webinars and free online training courses you can check to learn more about newer security features such as Always Encrypted, Dynamic Data Masking and Row-Level Security.

Because GDPR is a serious matter that affects large companies across the world, Microsoft created a workflow you may want to adopt to make GDPR compliance easier to follow in your company. Here is the visual representation:

SQL Server provides multiple out-of-the-box features that can be used to carry out each of the processes Microsoft recommends, so let’s take a look.

Discover:

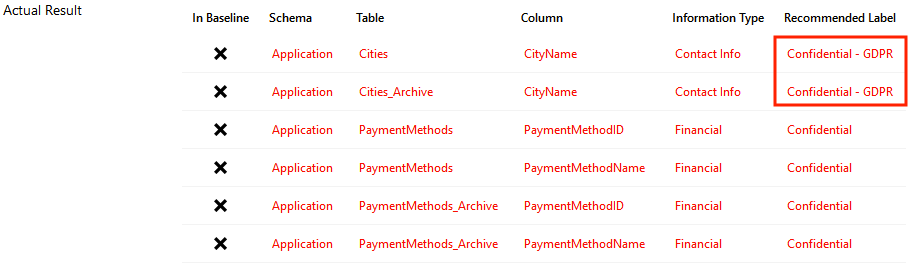

This step is about locating sensitive information and identifying whether it qualifies as personal data according to GDPR. You must answer the following questions:

- Which servers and/or databases contain personal data? Which columns or rows can be marked as containing personal data?

- Who has access to what elements of data in the database system? What elements and features of the database system can be accessed and potentially exploited to gain access to the sensitive data?

- Where does the data go when it leaves the database?
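To start answering the "who has access to what" question, one option is to query the permission catalog views. This is only a minimal sketch, assuming you run it inside the database you are reviewing; the column aliases are my own choice, and it lists explicit permissions only (permissions inherited through role membership would also need `sys.database_role_members`).

```sql
-- List explicit permissions per principal in the current database.
SELECT
    pr.name        AS principal_name,
    pr.type_desc   AS principal_type,     -- SQL_USER, WINDOWS_USER, DATABASE_ROLE, ...
    pe.state_desc  AS permission_state,   -- GRANT, DENY, ...
    pe.permission_name,
    pe.class_desc,                        -- DATABASE, OBJECT_OR_COLUMN, SCHEMA, ...
    CASE WHEN pe.class = 1                -- class 1 = object or column
         THEN OBJECT_SCHEMA_NAME(pe.major_id) + N'.' + OBJECT_NAME(pe.major_id)
    END            AS securable_name
FROM sys.database_permissions AS pe
JOIN sys.database_principals  AS pr
    ON pr.principal_id = pe.grantee_principal_id
ORDER BY pr.name, pe.class_desc, pe.permission_name;
```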

SQL Server tools you can use during the discover phase:

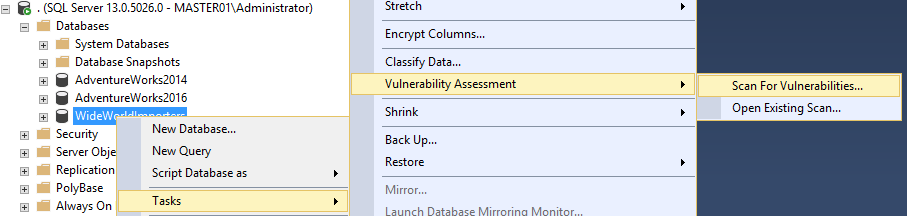

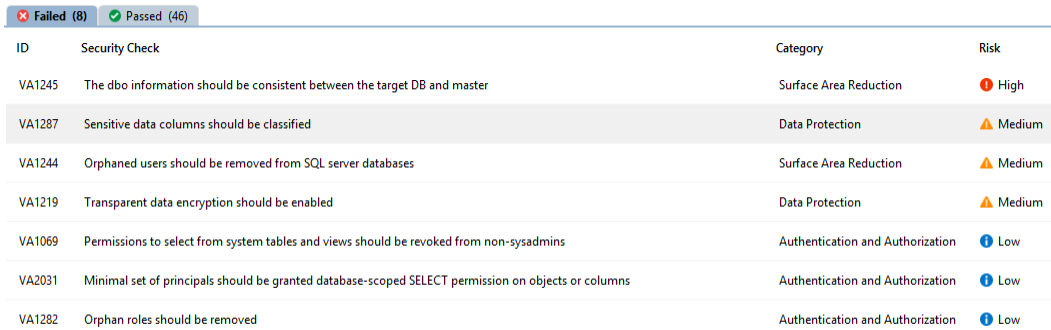

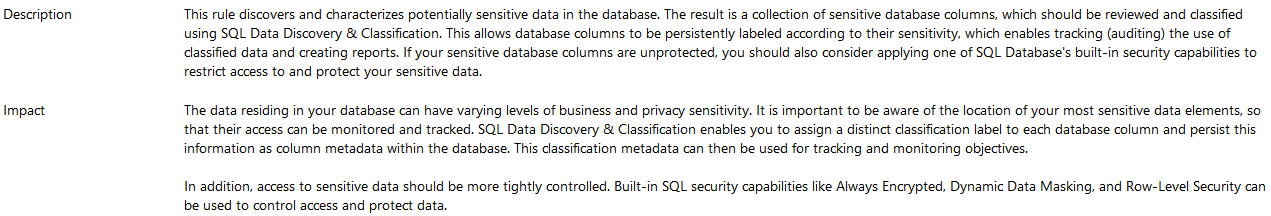

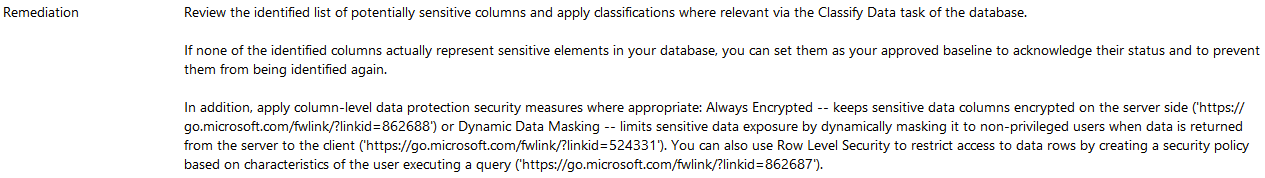

- SSMS Vulnerability Assessment: check my previous blog post about this new SSMS feature.

- Query sys.columns to identify column names that potentially contain personal information. You can also consider using Full-Text Search to expand your search.

- Check whether it is possible to disable some surface area features such as xp_cmdshell, CLR, FILESTREAM, cross-database ownership chaining, OLE Automation, external scripts and ad hoc distributed queries.
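The sys.columns search and the surface area check from the list above can be sketched as follows. The keyword list in the first query is purely illustrative (my assumption, not an official GDPR list), so adjust it to the naming conventions in your own databases. A name match is only a hint and the data still needs to be reviewed.

```sql
-- Find columns whose names hint at personal data (illustrative keywords only).
SELECT
    SCHEMA_NAME(t.schema_id)  AS schema_name,
    t.name                    AS table_name,
    c.name                    AS column_name,
    TYPE_NAME(c.user_type_id) AS data_type
FROM sys.columns AS c
JOIN sys.tables  AS t
    ON t.object_id = c.object_id
WHERE c.name LIKE N'%name%'
   OR c.name LIKE N'%mail%'
   OR c.name LIKE N'%phone%'
   OR c.name LIKE N'%birth%'
   OR c.name LIKE N'%address%'
   OR c.name LIKE N'%ssn%'
ORDER BY schema_name, table_name, column_name;
```

For the surface area features, sys.configurations shows what is currently enabled, and sp_configure can turn a feature off. The example disables xp_cmdshell only; check that nothing depends on a feature before you disable it.

```sql
-- Review the current state of the surface area options.
-- Option names are matched as written; on a case-sensitive instance the casing must be exact.
SELECT name, value_in_use
FROM sys.configurations
WHERE name IN (N'xp_cmdshell',
               N'clr enabled',
               N'filestream access level',
               N'cross db ownership chaining',
               N'Ole Automation Procedures',
               N'external scripts enabled',
               N'Ad Hoc Distributed Queries');

-- Disable xp_cmdshell (an advanced option, so advanced options must be visible first).
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
EXEC sp_configure 'xp_cmdshell', 0;
RECONFIGURE;
```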

Manage:

Once personal information has been located and gaps in data governance policies have been identified during the discover phase, it’s time to implement mechanisms that minimize the risk of unauthorized access to data or data loss.

SQL Server tools you can use during the manage phase:

- SQL Server authentication: there are two modes, Windows authentication mode and mixed mode. Windows authentication is often referred to as integrated security because this SQL Server security model is tightly integrated with Windows. Using it is the best practice.

- Create roles to define object-level permissions. Granting permissions to roles rather than users simplifies security administration. Permissions assigned to a role are inherited by all members of the role, and users can be added to or removed from a role to include them in or exclude them from a permission set.

- Use server-level roles for managing server-level access and security, and database roles for managing database level access.

- Azure SQL Database firewall rules, at the logical server and database levels. This way only authorized connections have access to the database, which aligns with the GDPR requirements.

- Dynamic data masking, to limit sensitive information exposure by masking the data to non-privileged users or applications.

- Row level security, to restrict access according to specific user entitlements.
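To make the role, Dynamic Data Masking and Row-Level Security items above more concrete, here is a minimal sketch for SQL Server 2016 or later. Everything named in it (the `dbo.Customers` table, the `SupportStaff` role, the `SupportUser` and `PrivacyOfficer` users, the predicate function and the policy) is hypothetical and exists only for illustration. The `GO` lines are batch separators for SSMS or sqlcmd.

```sql
-- Hypothetical table: Email and Phone are masked for non-privileged users.
CREATE TABLE dbo.Customers (
    CustomerId int IDENTITY(1,1) PRIMARY KEY,
    FullName   nvarchar(100) NOT NULL,
    Email      nvarchar(256) MASKED WITH (FUNCTION = 'email()') NOT NULL,
    Phone      varchar(20)   MASKED WITH (FUNCTION = 'partial(0,"XXX-XXX-",4)') NULL,
    SalesRep   sysname       NOT NULL   -- database user allowed to see the row
);
GO

-- Demo users without logins; in a real setup these would map to Windows logins.
CREATE USER SupportUser WITHOUT LOGIN;
CREATE USER PrivacyOfficer WITHOUT LOGIN;

-- Grant permissions to a role, then manage access through role membership.
CREATE ROLE SupportStaff;
GRANT SELECT ON dbo.Customers TO SupportStaff;
ALTER ROLE SupportStaff ADD MEMBER SupportUser;
ALTER ROLE SupportStaff ADD MEMBER PrivacyOfficer;

-- Only principals with UNMASK see the real values of masked columns.
GRANT UNMASK TO PrivacyOfficer;

INSERT INTO dbo.Customers (FullName, Email, Phone, SalesRep)
VALUES (N'Jane Doe', N'jane.doe@example.com', '555-010-1234', N'SupportUser'),
       (N'John Roe', N'john.roe@example.com', '555-010-5678', N'SomeoneElse');
GO

-- Row-Level Security: a row is visible to the user named in SalesRep, or to dbo.
CREATE FUNCTION dbo.fn_CustomerAccessPredicate (@SalesRep AS sysname)
RETURNS TABLE
WITH SCHEMABINDING
AS
RETURN SELECT 1 AS fn_result
       WHERE @SalesRep = USER_NAME() OR USER_NAME() = N'dbo';
GO

CREATE SECURITY POLICY dbo.CustomerFilter
    ADD FILTER PREDICATE dbo.fn_CustomerAccessPredicate(SalesRep) ON dbo.Customers
    WITH (STATE = ON);
GO

-- Try it: SupportUser should only get the row assigned to it, with masked Email and Phone.
EXECUTE AS USER = 'SupportUser';
SELECT CustomerId, FullName, Email, Phone FROM dbo.Customers;
REVERT;
```

For the Azure SQL Database firewall item, a database-level rule can be created with T-SQL as well. The rule name and the IP range below are placeholders taken from a documentation-only address range, and this statement applies to Azure SQL Database, not to an on-premises instance.

```sql
-- Azure SQL Database only: allow a specific IP range to reach this database.
EXECUTE sp_set_database_firewall_rule
    @name = N'ExampleOfficeRange',
    @start_ip_address = '203.0.113.10',
    @end_ip_address   = '203.0.113.20';
```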

Please stay tuned for part 2 of this post. Thanks for reading!

Carlos Robles is a Solutions Architect at AWS, a former Microsoft Data Platform MVP, a Friend of Redgate, but more than anything a technology lover. He has worked in the database management field on multiple platforms for over ten years in various industries.

He has diverse experience as a Consultant, DBA and DBA Manager. He is currently working as a Solution Architect, helping customers to solve software/infrastructure problems in their on-premise or cloud environments.

Speaker, author, blogger, mentor, Guatemala SQL User group leader. If you don’t find him chatting with friends about geek stuff, he will be enjoying life with his family.